Redshift event import

Prerequisites

Before you can import raw event data, complete the following:

- Raw event export – before you can import data from Swrve into Redshift, you need to configure the raw events export. For more information, see Setting up raw data export.

- Download Redshift query tools – Redshift supports a variety of different clients. If you are just getting started, we recommend you download the following tools:

- SQL Workbench – free cross platform SQL query tool.

- Redshift JDBC Drivers – download the drivers and save to your computer.

- Create Redshift cluster – create a Redshift cluster in your AWS console by following these instructions. If you are just getting started, we recommend you create a cluster with one dc1.large instance.

Set up Redshift database

Next, you need to configure your Redshift cluster to receive data from Swrve.

To configure it, connect to your cluster with SQL Workbench. Run the following command to create your database if it does not already exist:

CREATE DATABASE swrve_data;

Then, create your events table. You will load event data from S3 into this table.

CREATE TABLE events ( app_version VARCHAR(255), event_type VARCHAR(255), event_time BIGINT, client_time BIGINT, event_user VARCHAR(255), parameters VARCHAR(65535), payload VARCHAR(65535) );

This table schema is associated with a jsonpaths file that is located at s3://swrve-cdn/redshift/jsonpaths-v1.txt.

More details on the connection between jsonpaths files and Redshift schemas can be found in the AWS documentation here.

Load event data into Redshift

Now it’s time to load data into the tables you just created. Event data is loaded with the Redshift COPY command.

Load the event data with the below COPY command. The AWS access key and secret key below need to have access to the swrveexternal-<company_name> bucket.

COPY events FROM 's3://swrveexternal-<company_name>/app-<app_id>/2020-01' CREDENTIALS 'aws_access_key_id=<your_aws_access_key_id>;aws_secret_access_key=<your_aws_secret_access_key>' GZIP JSON 's3://swrve-cdn/redshift/jsonpaths-v1.txt';

This copies from all files starting with 2020-01. Adjust this to import the dates you require. The speed of the COPY command depends on the number of nodes in your Redshift cluster and the amount of data being imported.

Validate data

With all of your data loaded into Redshift, you can now begin running queries. Some simple ideas for queries are below.

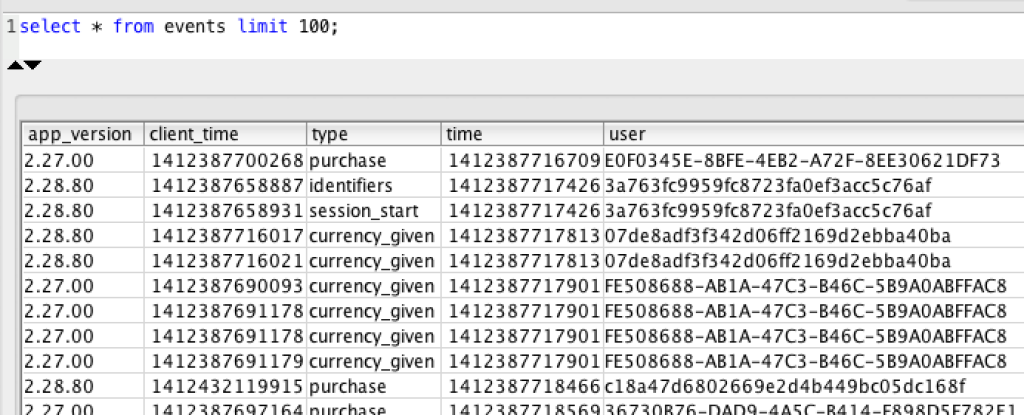

Events: This query shows how events are stored.

SELECT * FROM events LIMIT 100;

Each column maps to a parameter of our events API. Custom properties, such as user attributes, parameters and payloads, are loaded as JSON.

Next steps

After you load your data and are comfortable running simple queries, try running these best practice queries:

Most Swrve customers can self-host this pipeline; all you have to do is follow the steps in these tutorials. However, if you prefer a turn-key hosted solution, we do offer one as a professional service. There is an additional fee associated with this service. For more information, contact your CSM at support@swrve.com.

Swrve support can help you with basic setup and configuration of the pipeline described above. If you need help with your queries, contact our Data Services team at support@swrve.com. They will help you get the most out of your data with dedicated support, pre-built and custom reports, and dedicated data science hours.