A/B testing best practices

The following best practices apply to resource A/B tests that you run using the Swrve service.

Testing tutorials

Background: Your tutorial teaches your users how to use your app. A well-developed tutorial can help to retain users after install and is often the first area to focus on when trying to reduce churn. Creating a funnel that represents your tutorial helps to identify the major areas of churn and can be used in conjunction with resource A/B testing. For example, you could perform a conversion A/B test to test a range of text improvements for a tutorial step that is currently causing a drop-off in users.

By getting your users through the tutorial successfully, you significantly increase your chances of retaining and monetizing those users. If your churn is high, you should typically focus on improving your tutorial before trying to improve monetization of existing users. If you don’t already have a tutorial, you should create one, test it on a subset of your new users and compare the results.

Goal: Increase tutorial completion levels and new user retention.

Prerequisites:

- Create a funnel representing your tutorial. For more information about creating funnels in Swrve, see Funnels report.

- Identify the first major step in your funnel that leads to significant drop-off.
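
The prerequisite of finding the first major drop-off can be illustrated offline. The following is a minimal Python sketch, assuming you have exported per-step user counts from your funnel. The step names, the counts and the 25% threshold are hypothetical values chosen for illustration, and none of the function names are part of the Swrve service.

```python
from typing import List, Optional, Tuple

Transition = Tuple[str, str, float]


def step_drop_offs(funnel: List[Tuple[str, int]]) -> List[Transition]:
    """Return (from_step, to_step, drop_off_fraction) for each consecutive pair of steps."""
    results = []
    for (name_a, users_a), (name_b, users_b) in zip(funnel, funnel[1:]):
        # Fraction of users who reached step A but did not reach step B.
        drop = 0.0 if users_a == 0 else 1 - users_b / users_a
        results.append((name_a, name_b, drop))
    return results


def first_major_drop_off(
    funnel: List[Tuple[str, int]], threshold: float = 0.25
) -> Optional[Transition]:
    """Return the first transition whose drop-off meets the threshold, or None."""
    for transition in step_drop_offs(funnel):
        if transition[2] >= threshold:
            return transition
    return None


# Hypothetical funnel counts, invented for this example.
tutorial_funnel = [
    ("install", 1000),
    ("tutorial_step_1", 900),
    ("tutorial_step_2", 850),
    ("tutorial_step_3", 500),
    ("tutorial_step_4", 450),
]

worst = first_major_drop_off(tutorial_funnel)
if worst is not None:
    print(f"Test the step between {worst[0]} and {worst[1]}: {worst[2]:.0%} drop-off")
```

With these made-up counts, the transition from step 2 to step 3 is the first one that crosses the threshold, so step 3 would be the candidate for the test.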

Test parameters: Create a resource that contains, for example, the text for that step, and perform a conversion A/B test on it (for example, change ‘Hi there’ to ‘Hello!’).

Conversion event: Proceeding to the next step in your tutorial (if you’re testing step 3, then success is getting to step 4).

KPIs to measure:

- Day-1 Retention

- Day-3 Retention

- Day-5 Retention

- Day-7 Retention

- Revenue

- ARPU

For more information about each of these KPIs, see Intro to KPIs.

Swrve reporting tools:

- Funnels report. For more information, see Funnels report.

- Trend report with variant segments. For more information, see Trend reports.

Measuring success: There are several dimensions to look at when assessing the results of a test. Let’s assume you have a test with 40% of all users in the control group and the other 60% in the variant.

The following is a list of things to look at to see how the test affected the overall performance of your app:

- Graph the revenue and DAU KPIs for each variant. Was there any change? Was the change beneficial?

- View the retention KPIs for users in each variant. Did the winning variant increase retention?

- View the funnel by each variant. Did addressing the tutorial step improve funnel progress?
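
When the control and variant groups differ in size, as in the 40%/60% split above, compare conversion rates rather than raw conversion counts. The following is a minimal Python sketch of a generic two-proportion z-test that you could run on exported counts. It is not a description of how Swrve calculates its own test results, and the user and conversion counts are hypothetical.

```python
import math
from typing import Tuple


def two_proportion_z_test(
    conv_a: int, n_a: int, conv_b: int, n_b: int
) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for the hypothesis that both rates are equal."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the assumption that there is no real difference.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("Conversion rates of exactly 0% or 100% cannot be tested")
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: 10,000 new users split 40% control / 60% variant.
control_users, control_conversions = 4000, 1200   # 30% reach the next step
variant_users, variant_conversions = 6000, 2040   # 34% reach the next step

z, p = two_proportion_z_test(
    control_conversions, control_users, variant_conversions, variant_users
)
print(f"z = {z:.2f}, p = {p:.5f}")
```

A positive z value means the variant converted at a higher rate than the control. A small p-value suggests the difference is unlikely to be due to chance alone, but the retention and revenue KPIs still need to be checked before declaring a winner.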

Testing virality

Background: Virality is an important aspect of the success of an app or game. There are many ways to measure virality, such as counting the number of invites per user from within the app, presence in Twitter feeds, Facebook posts requesting items and so forth.

Because many social actions operate outside of what’s testable within your app, focus on those actions that can be tested directly within the app; for example, invites, installs, and requests to play with friends.

If your app has two buttons (one to play alone and one with a friend), a good test is to change the text or image of the ‘play with a friend’ button. If there is an option to invite Facebook friends, you may want to test whether users are more willing to invite others if the list is populated with existing friends (an active invite) versus having them select those friends manually (a passive invite).

Goal: Increase the triggering of social events and increase new users in channels of virality.

Prerequisites:

- Identify the events in your app that have a social aspect. The aim is to test the call to action on these events to try to encourage more social behavior.

- If there is a series of steps to invite a friend or challenge an opponent, create a funnel to represent these steps so that you can see where users opt out.

Test parameters: Create a resource for the ‘play with a friend’ button with relevant attributes (such as button location, color and/or size) and perform a conversion A/B test to test it.

Conversion event: The social action (such as invite friend or share on Twitter).

KPIs to measure:

- Conversion

- New Users

- Day-7 Retention

For more information about each of these KPIs, see Intro to KPIs.

Swrve reporting tools:

- Trend report with segments (for example, a segment for a particular channel of virality, such as Facebook installs). For more information, see Trend reports.

- Events report. For more information, see Events report.

- Funnels report (create a funnel representing the social process, if applicable). For more information, see Funnels report.

- User acquisition report. For more information, see User acquisition report.

Measuring success: A successful test increases the number of times a social event is triggered. If you are testing how effectively users generate traffic, you want to see a higher New Users KPI from the channel of virality. You can also use the user acquisition report to track installs per user acquisition channel.

Testing the start-up configuration

Background: The start-up configuration determines the amount of resources with which a user starts. One configuration could determine the starting currency. Apps often give players some premium currency to get them used to spending it. These premium currencies are often used in the tutorial to teach users how to complete jobs quickly or purchase premium items in order to set a precedent for future purchases. Giving users a set amount of free premium currency may keep those users around long enough to become invested in the app. This investment in the app might then cause them to spend real money to continue their experience.

Another configuration may represent the experience points (XP) needed to reach the next level. Reducing the amount of XP needed to level up may give users a sense of achievement. This can also be applied down the road to later levels where users may be dropping off. If it’s too difficult to advance to level 40, you may want to try testing a lower threshold to see if that encourages users to stick around.

Goal: Increase monetization or retention, or both.

Test parameters: Create a resource representing the start-up configuration of your game, then perform a conversion A/B test on it. The values for the variant group(s) should be significantly different from the control. For example, changing 50 premium gems to 51 gems is unlikely to produce a measurable difference, but changing 5 premium gems to 10 gems might.

Conversion event: The IAP event if you’re expecting users to purchase additional currency.

KPIs to measure:

- ARPU

- DAU

- Currency Spent

- Day-1 Retention

- Day-3 Retention

- Day-7 Retention

For more information about each of these KPIs, see Intro to KPIs.

Swrve reporting tools:

- Funnels report. For more information, see Funnels report.

- Events report (Level-up event). For more information, see Events report.

- Trend report with segments. For more information, see Trend reports.

Measuring success: A successful test increases retention and/or monetization. If you create a test that gives additional currency to users in the app, they should stick around longer or spend real-world money. If you make it easier for users to level up, ARPU should not decrease as a result.

Testing the FTE

Background: The FTE (first-time user experience) involves more than just the tutorial; it begins with any dialogue or interactive screens that users encounter before they even begin to learn to use the app. Forcing users to click through too many screens or enter too much information may ultimately cause them to leave your app. Too many choices can sometimes result in churn.

Omitting or simplifying steps in the FTE and testing its impact on retention and successful tutorial completion can benefit your app in the long run. For example, forcing a user to enter a Facebook ID before they can use your app may turn them away. But giving them the option to skip it may keep them around.

Goal: Remove obstacles in your onboarding flow that are making users churn. Identify the path of least resistance to retention and monetization.

Prerequisites:

- Create a funnel representing your initial FTE.

- Identify the first major step in your FTE where drop-off occurs.

- Create a new funnel representing your projected FTE (that is, without the logon step).

Conversion event: Proceeding to the next step in your FTE (if you’re testing removal of the start screen, then success might be getting users to start the tutorial).

KPIs to measure:

- Day-1 Retention

- Day-3 Retention

- Day-7 Retention

For more information about each of these KPIs, see Intro to KPIs.

Swrve reporting tools:

- Funnels report. For more information, see Funnels report.

- Trend report with segments. For more information, see Trend reports.

Measuring success: A successful test results in more users engaging in your tutorial, customizing their profile or simply using the app past launch.

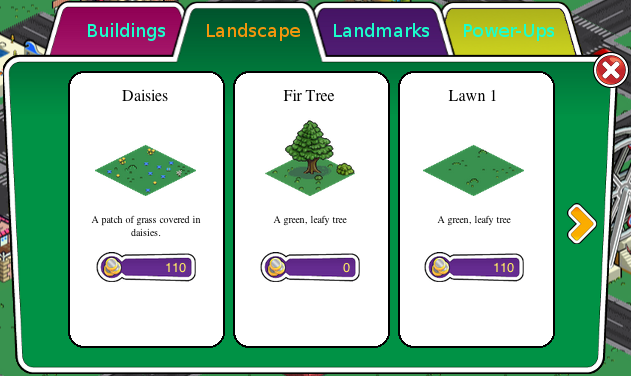

Testing the app economy

Background: It’s difficult to guess what your users are willing to pay for (with real or virtual currency). With Swrve, you can identify the sweet spot of a particular consumable. By getting users to spend more of their hard-earned currency, you may increase the perceived value of that currency.

Typically, if a user has too much virtual currency at hand, they may avoid grinding or purchasing additional currency. This may also have an effect on playtime or usage time (why spend 20 minutes a day farming if you have all the money you need?). Conversely, if a user finds that items cost too much, they may be discouraged from grinding or purchasing currency (why grind for coins if coins can’t even buy the items you need to advance?).

Goal: Increase virtual or real currency spending without negatively affecting ARPU or retention.

Prerequisites:

- Identify the top selling item(s) in your app.

- Determine the impact of testing a particular item. Will this test upset my user base? Should I exclude my paying users? Will existing users have biases and skew results?

Conversion event: The purchase event (for virtual currency) or the IAP event (for real currency) for the item being tested.

KPIs to measure:

- Day-1 Retention

- Day-3 Retention

- Day-7 Retention

For more information about each of these KPIs, see Intro to KPIs.

Swrve reporting tools:

- Trend report. For more information, see Trend reports.

- Item charts report. For more information, see Item charts report.

- Top items report. For more information, see Top items report.

Measuring success: A successful test results in users spending more currency, whether it’s by lowering prices, changing currency types or rewarding more items. If currency spending increases, be sure to check that ARPU hasn’t dropped significantly and that retention is still comparable. Lowering the price of an item may sometimes result in an increase in virtual currency spending but a decrease in real money spending.
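
The check described above can be expressed as a small guardrail comparison. The following is a minimal Python sketch, assuming that ARPU is calculated as real revenue divided by the number of users in the variant (the definition of the Swrve KPI may differ). The tolerance values and all figures are hypothetical, and the class and function names are invented for this example.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class VariantStats:
    users: int
    virtual_currency_spent: float
    real_revenue: float
    day7_retained: int

    @property
    def spend_per_user(self) -> float:
        return self.virtual_currency_spent / self.users

    @property
    def arpu(self) -> float:
        # Assumption: ARPU = real revenue / users in the variant.
        return self.real_revenue / self.users

    @property
    def day7_retention(self) -> float:
        return self.day7_retained / self.users


def evaluate(
    control: VariantStats,
    variant: VariantStats,
    max_arpu_drop: float = 0.05,       # hypothetical tolerance: 5% relative drop
    max_retention_drop: float = 0.02,  # hypothetical tolerance: 2 percentage points
) -> Dict[str, bool]:
    """Check the primary goal (more spending) against the ARPU and retention guardrails."""
    spend_up = variant.spend_per_user > control.spend_per_user
    arpu_ok = variant.arpu >= control.arpu * (1 - max_arpu_drop)
    retention_ok = variant.day7_retention >= control.day7_retention - max_retention_drop
    return {
        "spend_up": spend_up,
        "arpu_ok": arpu_ok,
        "retention_ok": retention_ok,
        "success": spend_up and arpu_ok and retention_ok,
    }


# Hypothetical results for a price reduction on a top-selling item.
control = VariantStats(users=5000, virtual_currency_spent=250_000, real_revenue=2500.0, day7_retained=1000)
variant = VariantStats(users=5000, virtual_currency_spent=325_000, real_revenue=2000.0, day7_retained=1010)

result = evaluate(control, variant)
print(result)
```

In this made-up example, virtual currency spending rises and retention holds, but ARPU falls by more than the tolerance, so the variant would not count as a success.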

Testing unlocking content

This section applies if you want to create a resource A/B test to determine whether you should unlock particular game content at particular levels.

Example scenario

Lucky Gem Casino has 30 slots that unlock at different user levels as users earn experience. The aim of the proposed A/B test is to determine whether unlocking the Monopoly Slot at level 2 or at level 3 results in higher 7-day retention.

Although this seems like a good test to run, the problem arises when you want to declare a winning variant. If variant A (where users unlock content at a later level) is the winning variant, and you then want to push that variant to the rest of the audience, users in variant B, who were happily playing the unlocked content, might now find it locked.

Recommendation

Avoid using Swrve for these kinds of tests unless the game technology has a way to dynamically update the game spec for individual users or segments of users. If the game does not have the ability to do this, Swrve recommends using low numbers in these types of tests and directly messaging users to inform them that you plan to optimize their game experience so there could be periodic changes to their gameplay. If impacted users complain, compensate them with free soft currency and migrate them to the level where they can receive the content if a change is made.

Using segmented A/B tests

Segmented A/B tests are a great way to run a test on a specific group of app users, such as high-spenders. The following best practices apply to segmented A/B testing.

New users

When you create a resource A/B test using segments, users who are new to your app do not qualify for the test on launch because they are not yet a member of the segment. However, the Swrve SDKs immediately make a second call for resources, so it takes about five seconds for a user to qualify for a segment, and thus for a segmented test.

Examples of segments to use in A/B tests

- Testing the storefront on paying users.

- Decreasing the difficulty for non-paying users.

Checking in the winner

When you check a test in, the winner is deployed to all users and not just the segment you selected. So, if your aim is to roll out a change to just paying users, you must create a 100% variant deployment test.

100% variant deployment

When you deploy a 100% variant test to the user population, all users in that segment receive that A/B test.

If the segment is dynamic (a user can enter and leave), it becomes difficult to manage when the test should stop serving A/B test resources to the user. By default, the test serves the changes indefinitely to users who enter the test. This means that even after the user leaves the segment (for example, a non-payer becomes a payer), they remain latched onto the test and continue to receive the A/B test resources.
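
The latching behavior can be illustrated with a toy model. The following Python sketch is not Swrve code and does not reflect how the service is implemented; the class, the segment rule and the resource values are hypothetical. It only shows why a user who leaves a dynamic segment keeps receiving the test resources.

```python
from typing import Callable, Dict, Optional, Set


class LatchedTest:
    """Toy model of a test that keeps serving resources to any user who has entered it."""

    def __init__(self, in_segment: Callable[[Dict], bool], resources: Dict):
        self.in_segment = in_segment
        self.resources = resources
        self.latched_users: Set[str] = set()

    def resources_for(self, user_id: str, attributes: Dict) -> Optional[Dict]:
        # A user who entered the test earlier stays in it, whatever their segment is now.
        if user_id in self.latched_users:
            return self.resources
        # A user enters the test the first time they match the segment.
        if self.in_segment(attributes):
            self.latched_users.add(user_id)
            return self.resources
        return None


# Hypothetical dynamic segment: non-payers.
non_payer_test = LatchedTest(
    in_segment=lambda attrs: attrs["total_spend"] == 0,
    resources={"starter_pack_price": 0.99},
)

before = non_payer_test.resources_for("user_1", {"total_spend": 0})
# user_1 makes a purchase and leaves the non-payer segment.
after = non_payer_test.resources_for("user_1", {"total_spend": 4.99})
# user_2 was already a payer and never enters the test.
never = non_payer_test.resources_for("user_2", {"total_spend": 9.99})

print(before, after, never)
```

In this model, user_1 continues to receive the non-payer resources after becoming a payer, which is why segments that users can leave are a poor fit for 100% deployment.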

Currently, Swrve doesn’t have a deployment system for serving A/B test resources dynamically (that is, for changing the A/B test resources served to users based on their segment).

Examples of segments to use for 100% deployment

- Has spent money (payers)

- User has reached level 3 or greater

- User has logged on to Facebook at least once

Examples of segments to avoid for 100% deployment

- Level 3

- Non-payer (they may become a payer at some point)

- iPad Users (a user may be using multiple devices)

Next steps

- Learn more about resource A/B tests. For more information, see Intro to resource A/B testing.

- Create a new resource A/B test. For more information, see Creating resource A/B tests.